RAID 5 Software Windows

Up your speed by linking two or more drives in RAID 0

For serious PC builders, speed is the name of the game. Too often, storage becomes a bottleneck that holds back even the beefiest CPU. Even with the advent of SSDs, leveraging a redundant array of independent disks (RAID) can drastically reduce boot and loading times. RAID 0 is the easiest way to get more speed out of two or more drives, and lets you use a pretty cool acronym to boot.

In our test rig, we used a pair of Samsung 840 EVOs with the latest firmware.

RAID has several “levels” that use drives in different ways.

Level 0 (RAID 0) spreads or “stripes” data between two or more drives. The problem with striping data across drives is that when things go wrong, they go really wrong: If a single hard drive in a RAID 0 array fails and cannot be recovered, the entire RAID array is lost.

On the plus side, RAID 0 combines the drives into a single larger logical drive with a capacity that is the sum of all the drives in the array. We found in our test rig that write cache stacked as well, which resulted in faster writing for large files. Data is read from or written to all the drives simultaneously, which greatly increases transfer rates.

There are three ways to implement RAID: hardware, software, and FakeRAID. Hardware RAID is faster, but it’s also more expensive due to the need for specialized hardware. Software and FakeRAID use the CPU in lieu of a dedicated RAID chip.

Creating a software RAID array in operating system software is the easiest way to go.
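To make the striping idea concrete, here is a minimal Python sketch of how a striped array might map data onto its drives. It is an illustration only, not how any particular RAID implementation works internally: the function names are made up for this example, the 250GB drive size is a hypothetical figure, and it assumes identical drives and simple round-robin placement of stripe units.

```python
def locate_stripe_unit(logical_unit: int, num_drives: int) -> tuple:
    """Return (drive index, unit index on that drive) for a logical stripe unit.

    Assumes simple round-robin striping, which is an illustrative model only.
    """
    if num_drives < 2:
        raise ValueError("RAID 0 needs at least two drives")
    return logical_unit % num_drives, logical_unit // num_drives


def raid0_capacity_gb(drive_size_gb: float, num_drives: int) -> float:
    """Usable capacity with identical drives: the sum of all the drives."""
    return drive_size_gb * num_drives


def drives_touched(first_unit: int, unit_count: int, num_drives: int) -> set:
    """Drives that hold part of a file spanning consecutive stripe units."""
    return {
        locate_stripe_unit(unit, num_drives)[0]
        for unit in range(first_unit, first_unit + unit_count)
    }


if __name__ == "__main__":
    # Consecutive stripe units alternate between the two drives.
    for unit in range(6):
        print(unit, locate_stripe_unit(unit, 2))
    # Two hypothetical 250GB drives appear as one 500GB logical drive.
    print(raid0_capacity_gb(250, 2))
    # A file spanning four stripe units lives on both drives,
    # so losing either drive loses the file.
    print(drives_touched(0, 4, 2))
```

Because any file larger than one stripe unit ends up on every drive, both drives can work on it at once, and by the same token a single failed drive takes part of every such file with it.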


Windows 8 comes with everything you need to use software RAID, while the Linux package “ ” is listed in most standard repositories.

The problem with software RAID is that it only exists in the OS it was created in. Linux can’t see a RAID array created in Windows and vice versa.

If you’re dual booting both Linux and Windows and need access to the array from both operating systems, use FakeRAID. Otherwise, stick to software.

Prepare your hardware

To ensure the best RAID performance, use identical drives with the same firmware. Mixing drive makes and models may work, but will result in faster drives being slowed down to match the slowest drive in the array. Don’t mix SSDs and mechanical drives in a RAID array; the SSD is faster on its own.

RAID 0 doesn’t protect you from drive failure, so use new drives whenever possible.

When connecting your drives, make sure they’re all using the same SATA version as well.

Before a drive can be used in a RAID array, it must be clear of filesystems and partitions. If you’re using old drives, make sure you get everything of value off of them first. You can remove any partitions with Disk Management on Windows or “gparted” on Linux.

If you’re using FakeRAID, the motherboard’s RAID utility should warn you before it wipes partition tables and the filesystems on them.

In your operating system, you’ll need to have elevated permissions to create a RAID array. For Windows, you’ll need to be an Administrator. In Linux, you’ll need either the root password or sudo access.

If you want to use FakeRAID, make sure your motherboard supports it. Be warned though: Installing an OS on top of a RAID 0 array can be really risky if your system data is critical.

Windows: storage spaces

Creating a software RAID 0 array on Windows is really easy, and relatively painless.

The thing is, Microsoft doesn’t call it RAID in Windows 8, opting for “storage spaces” and “storage pools” instead.

Hit Win+S, search for “storage spaces,” and open the utility. Next, click Create a new pool and storage space.

You’ll be prompted for administrator access. Click Yes to continue.

Windows 8's built-in RAID software goes by the name 'Storage Spaces.'

You’ll be greeted by a window showing all the unformatted disks that can be used. Select all the disks you want in the array and click Create pool.

To create a storage pool in Windows 8, the disks need to be unformatted.

Next, give the pool a name and drive letter.

The name will appear as the drive label. Select NTFS as the filesystem. For Resiliency type, select Simple (no resiliency). This is the equivalent of RAID 0. When you’re ready, click Create storage space to create the array.

While a simple storage space technically only requires one hard disk, you need at least two for it to be a true RAID setup.

If you want to remove a RAID array for any reason, simply click Delete next to the storage space you want to remove. To remove the pool, remove all of the storage spaces in it first.

When you're all done, you'll be able to manage your storage spaces, check capacity, and monitor usage.

See? Told you it was easy. Next up, we're going to cover.

I'm not looking for advice on why I should not use software RAID, so please save yourself the trouble :-P

What kind of write performance have you achieved with software RAID 5... WITHOUT cache?

In my view, a benchmark with cache enabled doesn't say anything and is not comparable. I'm getting writes at 20MB/s on 3 x mechanical disks in both Intel RSTe and Windows 2016... pretty useless. With cache enabled I get several thousand MB/s... until the RAM cache is full :-P

Have you seen better numbers, and how did you achieve them? Is it really not possible to get better performance? What about FreeNAS or Linux... any numbers?

GUIn00b wrote: The other factors will be your CPU (because it's software RAID) and the bus. If it's a Celeron on a SATA-1 bus, then it's going to be crap. RPM of the spindle matters too.

Since 2000, no mainline processor is noticeable in the chain. The slowest AMD64 processor you can buy this decade is faster than the disks can go. Since the Pentium IIIs were released in 2000 (32-bit, single core), the main CPU has been so fast that it is basically never a bottleneck.

That the CPU matters is really a legacy memory from the 1990s, when that was still true, especially with Pentium and 486 procs where CPU binding was a huge deal. Today, software RAID is so fast at the CPU layer that all that matters is the speed of the drives.

So what is the bottleneck?
- The disks? Well, they easily do 130MB/s... there is a long way down to 20MB/s!
- CPU? CPU usage is rather low when I benchmark... should not be the issue.
- RAM: I specifically said no cache, so it shouldn't play any role ;-)
- Controller: should easily handle it.
- Software RAID algorithm: I believe this is the problem?

If someone can show me better numbers with FreeNAS or Linux, then we'll know :-P I wouldn't be surprised if the MS software RAID implementation is just that bad. If you don't disable cache when benchmarking, everything looks fine, at least for a while until the cache is filled up (then it can drop as low as 2MB/s)... and that usually takes a test file of several GB, depending on RAM of course. But still no numbers :-(

I'm using diskspd as the benchmark tool, command:
diskspd.exe -b128K -d10 -h -t1 -o32 -c500M -w100 c:io.dat

I've now read the: :-P Look at RAID 5, it says: 'Parity RAID adds a somewhat complicated need to verify and re-write parity with every write that goes to disk. This means that a RAID 5 array will have to read the data, read the parity, write the data and finally write the parity. Four operations for each effective one. This gives us a write penalty on RAID 5 of four. So the formula for RAID 5 write performance is NX/4.'

When doing a new write, what data does the RAID 5 set have to read from the disks? The data is not yet on the disks! I don't get it. I understand it has to calculate parity etc. of the data, but that has nothing to do with the RAID 5 disk set yet; it's all done by the CPU/software before data is written to the disks. And again, the CPU doesn't seem to be the bottleneck. But I do admit it fits fine with the benchmark: 130MB/s / 4 = 32MB/s.

Yes, but a controller with RAM is costly, adds another point of failure, and uses a lot of power: 14 watts! (That again creates more heat in your server room and needs more/faster cabinet fans, which again add 2 more watts. The extra fans create more vibrations that your disks don't like.) And you can be in trouble if your controller dies and you can't get another quickly, or cannot get it at all because it's obsolete.
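On the write-penalty question raised above, a small Python sketch may help show where the two reads come from. It assumes plain XOR parity and a write that changes only one data chunk of an existing stripe; the function names are made up for this illustration and do not describe how Storage Spaces, RSTe, or any other specific implementation behaves. The parity chunk covers data that is already on the other disks, so when only part of a stripe changes, the old data and old parity are read back in order to work out the new parity. A write that replaces a whole stripe at once can compute parity from the new data alone.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length chunks."""
    return bytes(x ^ y for x, y in zip(a, b))


def parity(chunks) -> bytes:
    """Parity chunk for a full stripe: XOR of all its data chunks."""
    return reduce(xor_bytes, chunks)


def small_write_parity(old_data: bytes, old_parity: bytes, new_data: bytes) -> bytes:
    """New parity after overwriting one data chunk of an existing stripe.

    Needs the old data and old parity (two reads); the caller then stores
    the new data and the returned parity (two writes): four I/Os in total.
    """
    return xor_bytes(xor_bytes(old_parity, old_data), new_data)


def raid5_write_estimate(num_disks: int, per_disk_mb_s: float, penalty: int = 4) -> float:
    """The quoted NX/4 rule of thumb, reading N as disk count and X as
    per-disk throughput (an assumption; the quote does not define them)."""
    return num_disks * per_disk_mb_s / penalty


if __name__ == "__main__":
    d0, d1 = bytes([0x0F, 0xF0]), bytes([0x33, 0x55])  # existing data chunks
    p = parity([d0, d1])                               # existing parity chunk
    new_d0 = bytes([0xAA, 0xBB])                       # chunk being overwritten
    new_p = small_write_parity(d0, p, new_d0)
    # Same parity as recomputing over the whole stripe, without reading d1.
    print(new_p == parity([new_d0, d1]))
    # The untouched chunk can still be rebuilt from the new parity.
    print(xor_bytes(new_p, new_d0) == d1)
    print(raid5_write_estimate(3, 130))
```

Read that way, the formula gives 3 x 130 / 4 = 97.5MB/s for the three-disk array, whereas the 130 / 4 = 32MB/s figure in the post applies the penalty to a single disk's throughput.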

A dependency I can be without. A controller does have its advantages, but it's not all heaven ;-) It seems software RAID based on FreeBSD (NAS4Free, FreeNAS) or even basic RAID on Linux can give you good performance. I'm making a test setup at the moment; I'll know soon if it is the way to go... cannot say yet.

B-W wrote: Yes, but a controller with RAM is costly, adds another point of failure, and uses a lot of power: 14 watts! (That again creates more heat in your server room and needs more/faster cabinet fans, which again add 2 more watts. The extra fans create more vibrations that your disks don't like.) And you can be in trouble if your controller dies and you can't get another quickly, or cannot get it at all because it's obsolete. A dependency I can be without. A controller does have its advantages, but it's not all heaven ;-) It seems software RAID based on FreeBSD (NAS4Free, FreeNAS) or even basic RAID on Linux can give you good performance. I'm making a test setup at the moment; I'll know soon if it is the way to go... cannot say yet.

You can get a halfway decent card for sub-$300, and very solid ones at $500, depending on your application.

14W is probably 1/10 of the TDP of a single processor. Adding 10% to the heat load when any good configuration is going to already have a fan blowing across the PCI-e bus or the risers is minimally more expensive.

And the fans should be mounted with rubber bushings, so vibrations aren't really an issue. If the controller dies, yes, you need a replacement.

You're adding another point of failure, but it's the same point of failure as the software layer that is handling your storage.

You can get good or even excellent performance out of software RAIDs, but RSTe is not the way to go. Better would be something like mdadm. It's worth considering the opportunity cost to figure all of this out and test and deploy on your own versus buying a tested and reliable solution and moving on to other problems.

The server I have uses 21 watts idle! 45W at full thrust (which it never does IRL). SuperMicro server-class board with 2+1 NIC, 4 disks, i3 @ 3.7GHz CPU with ECC support, AES instructions for your VM VPN firewall.

I run 5 VM servers and several users using terminal services on this little CPU... it just does the job :-P 14W is not just a small increase. My server CPU does nothing 95% of the time... it can just as well do some RAID :-P

In my view, software RAID is not another point of failure. I just restore the VM image and I'm good to go, even on ANY metal. Try to get another controller from your dealer in 15 minutes! You don't wanna have a server down for 1, 2, 3... 14 days, so you have to buy two controllers, one for spare = double price! No thank you.

Well, I still have to confirm if my SW RAID is actually useful; I'll get back with some numbers. I might have to eat my own words... we'll see :-P

Sean Wolsey wrote: My experience with software RAID has been that it's slower than hardware RAID.

This makes sense when you stop and think about the fact that software is another layer the data has to go through - a layer that requires CPU cycles. If you want the best possible performance, give up on the idea of using software RAID. Bite the bullet and invest in a good RAID card and good drives to go with it.

That's not actually true.

Software RAID has been faster for 17 years now. And it doesn't have to go through another layer.

It goes to a CPU either way. But with software RAID it goes to a faster CPU; with hardware RAID it goes to a slower one. Software RAID is used for all of the biggest, fastest systems for a reason. Only in very small systems is hardware RAID even a thing.

B-W wrote: The server I have uses 21 watts idle! 45W at full thrust (which it never does IRL). SuperMicro server-class board with 2+1 NIC, 4 disks, i3 @ 3.7GHz CPU with ECC support, AES instructions for your VM VPN firewall. I run 5 VM servers and several users using terminal services on this little CPU... it just does the job :-P 14W is not just a small increase. My server CPU does nothing 95% of the time... it can just as well do some RAID :-P In my view, software RAID is not another point of failure. I just restore the VM image and I'm good to go, even on ANY metal. Try to get another controller from your dealer in 15 minutes! You don't wanna have a server down for 1, 2, 3... 14 days, so you have to buy two controllers, one for spare = double price! No thank you. Well, I still have to confirm if my SW RAID is actually useful; I'll get back with some numbers. I might have to eat my own words... we'll see :-P

I'm curious to see what you're able to achieve in the end.

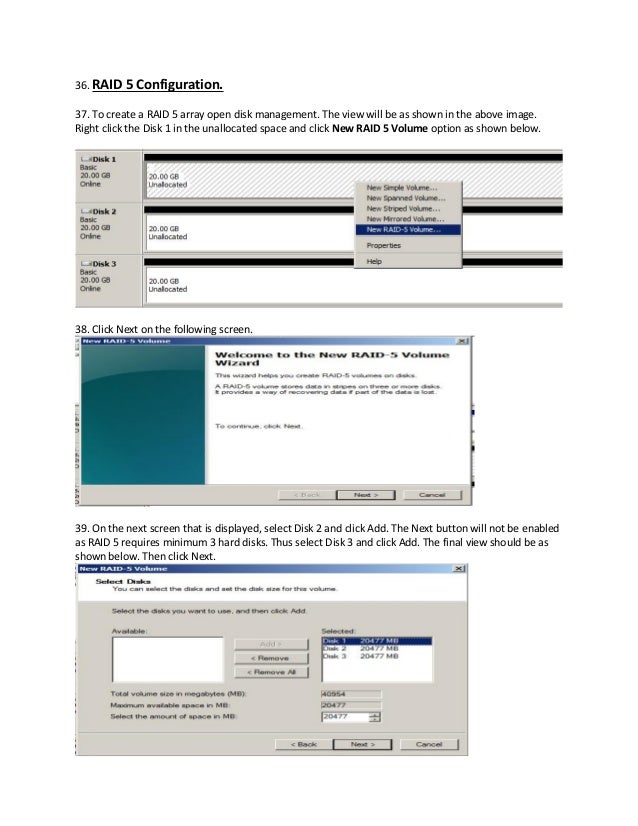

Raid 5 Windows 10 Pro

I'll eat my words slightly about the TDP of the smaller processors. I was thinking Xeons, but big beefy ones and not something comparable in core count to the i3 you're running.

In a reasonable scenario slightly outside of the low end of SMB, if a RAID card fails, you can always grab an old server/card and import the config. It still requires having similar hardware and a hope, but realistically we're talking about restoring backups onto a different array anyway in the case of hardware failure.

Status: I got NAS4Free up and running as a guest, created a RAID 5 (3 disks) and set it up as an iSCSI target.

The whole Intel onboard SATA controller was passed through to the guest in ESX.

Sean Wolsey wrote: My experience with software RAID has been that it's slower than hardware RAID. This makes sense when you stop and think about the fact that software is another layer the data has to go through - a layer that requires CPU cycles. If you want the best possible performance, give up on the idea of using software RAID. Bite the bullet and invest in a good RAID card and good drives to go with it.

It depends (c). ZFS has no write hole 'by design', so RAID-Z(2) kills RAID 5/6 on write performance, while being comparable on reads. Hardware RAID controllers might have NVRAM write buffers, so small spikes or pulsating I/O might get smoothed, but... very small ones, for a very short time :) With modern fast flash memory, RAID controllers quickly become obsolete, as you need to throw in multi-core ARM + FPGAs for faster write path processing. Plus, NVMe flash has such heavy logic that the RAID controller CPU really, really needs to know how to work with its very deep multiple write queues; that's why NVMe RAIDs are rare.

I now got a new test setup up and running with software RAID 5, getting 160MB/s write and 250MB/s read. YES! :-) (Bear in mind this is equal to the physical write speed of this HDD model.)

CPU: i3 6100 (4 cores)
Mobo: SuperMicro X11SSM-F (C236 chipset, 8 x SATA)
Additional disk: M.2 disk on NVMe PCI controller (for booting ESX and FreeNAS)
Hypervisor: ESX 6.5U1
NAS: FreeNAS, running as guest VM, assigned 2 cores and 'only' 6GB RAM
Disks: 3 x Seagate Enterprise 2.5" HDD 7200RPM SATA

The onboard Intel SATA controller has been set to passthrough to the FreeNAS VM. ESXi boots from the M.2/NVMe disk. The FreeNAS VM is also on this disk.

I configured the 3 x HDDs as RAIDZ in FreeNAS and set up iSCSI. ESX was the iSCSI initiator, and I created a datastore on the iSCSI volume. I created a Win2016 VM with a virtual hard disk from the ESX iSCSI datastore, hence performance might be even better if you connect Win2016 directly to FreeNAS by iSCSI. But the speed I get going through the ESX datastore is so good that I'll keep this setup.

The write speed on these Seagate disks 'directly' should be 160MB/s, so there seems to be no overhead. Copying a file from the network to Win2016 ran at 110MB/s (1Gb LAN)... and very stable, and I copied 23GB to make sure the cache was filled up.

CPU usage:
On the FreeNAS VM with 2 cores assigned: both cores at about 40% at full disk activity, e.g. rebuilding or WRITE.
On ESX: the two other cores, which are not assigned, at about 2%.

Additional advantages:
It's impossible to get good SATA HDD performance on ESX connected directly. Disk latency goes through the roof (400ms) and transfer drops to 1MB/s. But with this setup, heavy disk activity is done without any latency :-)

Another thing: these are 'modern' disks with 4K sectors, which ESX doesn't support (unbelievable)... but now it does :-)

I tried to disconnect one of the disks and everything worked fine, so the RAID set works.